Artificial Intelligence (AI) continues to be one of the fastest-growing sectors at the forefront of technological development, with AI-based concepts such as machine learning and deep learning becoming popular buzzwords that capture the attention of the mainstream media and the public.

With this increased attention, however, AI is coming under additional scrutiny from national and international regulatory bodies, as the ethical concerns surrounding the use and potential of this technology become more pronounced.

The problem with AI

While AI can be a powerful tool for achieving meaningful positive impact on the world, it can also pose several ethical risks if misused. In its white paper on ethical AI, AI4People, a multi-stakeholder forum launched at the European Parliament, identified four key risks that could result from the misuse of AI: the devaluation of human skills, the removal of human responsibility, the reduction of human control, and the erosion of human self-determination. In the broader discussion, other fears surrounding AI technology include the loss of human jobs, the spread of media disinformation, the use of AI for malicious purposes, and the prevalence of bias in AI-dependent systems.

This last point is a particularly serious obstacle to using this technology in situations where AI-driven decisions have a meaningful impact on people's lives, such as employment, legal proceedings or medical diagnoses. We are frequently exposed to headlines reporting racist or sexist outcomes from algorithms developed by leading tech companies, from the offensive mislabelling of people by facial recognition software to credit scoring systems that discriminate against ethnic minority groups.

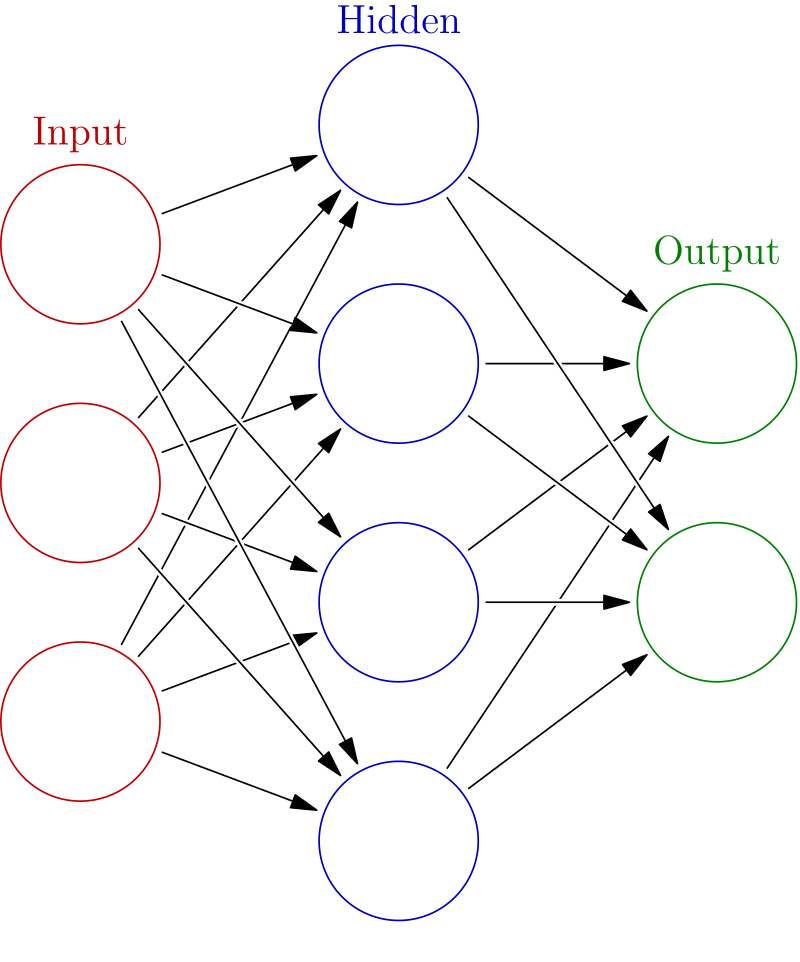

The lack of transparency in machine learning algorithms creates a serious problem of trust in these technologies, especially in deep learning, where the inner workings of the AI system’s decision-making process are inaccessible, forming an indecipherable “black box”. How can we trust a machine learning algorithm to inform critical decisions, such as diagnosing a patient with a disease, if we cannot know how it reached that conclusion?

Defining the problem

On 8th April 2019, the High-Level Expert Group on AI, tasked by the European Commission with steering the work of the European AI Alliance, presented its Ethics Guidelines for Trustworthy Artificial Intelligence. The guidelines set out seven key requirements that AI systems should meet in order to be deemed trustworthy:

- Human agency and oversight

- Technical robustness and safety

- Privacy and data governance

- Transparency

- Diversity, non-discrimination, and fairness

- Societal and environmental well-being

- Accountability

More recently, on 21st April 2021, the Commission proposed a set of new rules and actions for excellence and trust in Artificial Intelligence. These rules introduce a risk-based approach that classifies AI systems into different levels of risk, ranging from unacceptable to minimal. Systems posing an unacceptable risk, i.e. a clear threat to the safety, livelihoods and rights of people, would be banned outright; high-risk systems would be subject to strict obligations before they can be put on the market; limited-risk systems would face specific transparency obligations; and minimal-risk systems would remain largely unrestricted.

Margrethe Vestager, the Executive Vice-President for a Europe fit for the Digital Age, said: “On Artificial Intelligence, trust is a must, not a nice to have. With these landmark rules, the EU is spearheading the development of new global norms to make sure AI can be trusted. By setting the standards, we can pave the way to ethical technology worldwide and ensure that the EU remains competitive along the way. Future-proof and innovation-friendly, our rules will intervene where strictly needed: when the safety and fundamental rights of EU citizens are at stake.”

Solving the problem

The solution, therefore, lies in making AI more transparent and trustworthy. While the EU and other legislative bodies may steer the development of AI on the right path through regulation, the solution itself will likely come from the private sector, as companies race to develop technologies that make deep learning algorithms transparent and explainable, in an emerging field aptly named Explainable AI (XAI).

LIME and SHAP are two popular XAI techniques that have been praised as breakthroughs in making black-box models more transparent. In the 2016 paper that introduced LIME, its potential was demonstrated by explaining how an otherwise incomprehensible image classifier identified the different objects in an image it was presented with. However, concerns are growing that both LIME and SHAP can be hacked and intentionally manipulated, which would undermine the very trustworthiness these tools are meant to give an AI system. A recent research paper by scholars from Harvard and the University of California outlines how variants of these tools are vulnerable to such attacks.

A newly emerging yet highly promising challenger in the field of XAI is UMNAI, a Maltese company with a large portfolio of patented technologies that has built an extensive toolset which can translate any opaque black-box model into a fully transparent and comprehensible white-box model. With this technology, users can trace the decision-making process of any algorithm and put a human in the loop where it matters most, helping to mitigate issues such as bias and ensuring accountability. Such technology could be key to paving the way towards trustworthy, human-centric AI systems.

If you are interested in learning more about this topic or have ideas or projects that can contribute to the discussion, please contact us at [email protected].

Alexander Camilleri

Science and Technology Writer and Project Officer at AcrossLimits